library(bpcs)

library(ggplot2)

library(dplyr)

library(tibble)

library(kableExtra)

library(bayesplot)

library(knitr)Getting started

In this vignette, we provide an example of the usage of the bpcs package along with the core concepts to use the package.

Installation

The package requires installation of the rstan package (Stan Development Team 2020). For more details see the REAMDE.md document.

To install the latest version from Github:

remotes::install_github('davidissamattos/bpcs')After install we load the package with:

Introduction

The bpcs package performs Bayesian estimation of Paired Comparison models utilizing Stan. We provide a series of models and auxiliary functions to help in the analysis and evaluation of the models. However, this package have the philosophy of ‘batteries not included’ for plots, tables and data transformation. There are already many great packages capable of performing create high quality plots, tables and that provides tools for data transformation. Since each user can have their own preferences, customization needs and data cleaning and transformation workflows, we designed not to enforce any particular framework or package. Our functions were designed to return cleaned data frames that can be used almost directly, or with few transformations in those packages.

At the moment, the only exception to this is the expand_aggregated_data function that receives a data frame with the number of wins for player 1 and the numbers of wins for player 2 and expand this aggregated data into a single match per row (that is required for our models). We include this function because this type of transformation is common since packages such as BradleyTerry2 (Turner, Firth, and others 2012) receives this type of aggregated data and many available datasets are presented like that.

With that said, we provide in the vignettes the code we use to transform the data and generate the tables and plots. The user is free to use/copy/modify these codes for their own use. For those we rely on the collection of packages tidyverse (Wickham et al. 2019), and the packages knitr (Xie 2014) and kableExtra (Zhu 2020).

Tennis example

In this example, we will use the example from tennis players from Agresti (Agresti 2003). The data tennis_agresti contains the information regarding tennis matches between 5 players, and who won the match, 0 for player0 or 1 for player1.

knitr::kable(tennis_agresti) %>%

kableExtra::kable_styling()| player0 | player1 | y | id |

|---|---|---|---|

| Seles | Graf | 0 | 1 |

| Seles | Graf | 0 | 2 |

| Seles | Graf | 1 | 3 |

| Seles | Graf | 1 | 4 |

| Seles | Graf | 1 | 5 |

| Seles | Sabatini | 0 | 6 |

| Seles | Navratilova | 0 | 7 |

| Seles | Navratilova | 0 | 8 |

| Seles | Navratilova | 0 | 9 |

| Seles | Navratilova | 1 | 10 |

| Seles | Navratilova | 1 | 11 |

| Seles | Navratilova | 1 | 12 |

| Seles | Sanchez | 0 | 13 |

| Seles | Sanchez | 0 | 14 |

| Graf | Sabatini | 0 | 15 |

| Graf | Sabatini | 0 | 16 |

| Graf | Sabatini | 0 | 17 |

| Graf | Sabatini | 0 | 18 |

| Graf | Sabatini | 0 | 19 |

| Graf | Sabatini | 0 | 20 |

| Graf | Sabatini | 1 | 21 |

| Graf | Sabatini | 1 | 22 |

| Graf | Sabatini | 1 | 23 |

| Graf | Navratilova | 0 | 24 |

| Graf | Navratilova | 0 | 25 |

| Graf | Navratilova | 0 | 26 |

| Graf | Sanchez | 0 | 27 |

| Graf | Sanchez | 0 | 28 |

| Graf | Sanchez | 0 | 29 |

| Graf | Sanchez | 0 | 30 |

| Graf | Sanchez | 0 | 31 |

| Graf | Sanchez | 0 | 32 |

| Graf | Sanchez | 0 | 33 |

| Graf | Sanchez | 1 | 34 |

| Sabatini | Navratilova | 0 | 35 |

| Sabatini | Navratilova | 1 | 36 |

| Sabatini | Navratilova | 1 | 37 |

| Sabatini | Sanchez | 0 | 38 |

| Sabatini | Sanchez | 0 | 39 |

| Sabatini | Sanchez | 0 | 40 |

| Sabatini | Sanchez | 1 | 41 |

| Sabatini | Sanchez | 1 | 42 |

| Navratilova | Sanchez | 0 | 43 |

| Navratilova | Sanchez | 0 | 44 |

| Navratilova | Sanchez | 0 | 45 |

| Navratilova | Sanchez | 1 | 46 |

We can fit a Bayesian Bradley-Terry model using the bpc function

m1 <- bpc(data = tennis_agresti,

player0 = 'player0',

player1 = 'player1',

result_column = 'y',

model_type = 'bt',

solve_ties = 'none', #there are no ties

show_chain_messages = T)

#> Running MCMC with 4 parallel chains...

#>

#> Chain 1 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 1 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 2 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 2 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 3 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 3 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 4 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 4 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 1 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 1 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 2 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 2 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 3 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 3 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 4 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 1 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 2 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 4 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 1 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 1 Iteration: 1001 / 3000 [ 33%] (Sampling)

#> Chain 2 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 2 Iteration: 1001 / 3000 [ 33%] (Sampling)

#> Chain 3 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 4 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 3 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 3 Iteration: 1001 / 3000 [ 33%] (Sampling)

#> Chain 4 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 4 Iteration: 1001 / 3000 [ 33%] (Sampling)

#> Chain 2 Iteration: 1200 / 3000 [ 40%] (Sampling)

#> Chain 1 Iteration: 1200 / 3000 [ 40%] (Sampling)

#> Chain 3 Iteration: 1200 / 3000 [ 40%] (Sampling)

#> Chain 4 Iteration: 1200 / 3000 [ 40%] (Sampling)

#> Chain 1 Iteration: 1400 / 3000 [ 46%] (Sampling)

#> Chain 2 Iteration: 1400 / 3000 [ 46%] (Sampling)

#> Chain 3 Iteration: 1400 / 3000 [ 46%] (Sampling)

#> Chain 4 Iteration: 1400 / 3000 [ 46%] (Sampling)

#> Chain 1 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 2 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 4 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 3 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 1 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 4 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 1 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 2 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 3 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 4 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 3 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 1 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 2 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 4 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 3 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 1 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 4 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 2 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 3 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 1 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 4 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 2 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 3 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 1 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 2 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 4 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 3 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 4 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 4 finished in 3.4 seconds.

#> Chain 1 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 2 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 3 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 1 finished in 3.5 seconds.

#> Chain 2 finished in 3.5 seconds.

#> Chain 3 finished in 3.5 seconds.

#>

#> All 4 chains finished successfully.

#> Mean chain execution time: 3.5 seconds.

#> Total execution time: 3.8 seconds.Diagnostics

After the chain converges to find the result we can investigate if everything went right. For that we can use the excellent tool provided in the shinystan (Gabry 2018) package that helps to assess the convergence of the chains.

The bpcs package provides a tiny wrapper to launch it automatically with some default parameters.

launch_shinystan(m1)If you prefer to investigate without shinystan we can retrieve the cmdstanr fit object and investigate ourselves or with the help of the bayesplot package (Gabry et al. 2019). The indexes in Stan refer to the names and indexes available at the lookup table.

knitr::kable(m1$lookup_table)| Names | Index |

|---|---|

| Seles | 1 |

| Graf | 2 |

| Sabatini | 3 |

| Navratilova | 4 |

| Sanchez | 5 |

fit <- get_fit(m1)

posterior_draws <- posterior::as_draws_matrix(fit$draws())Getting the traceplots:

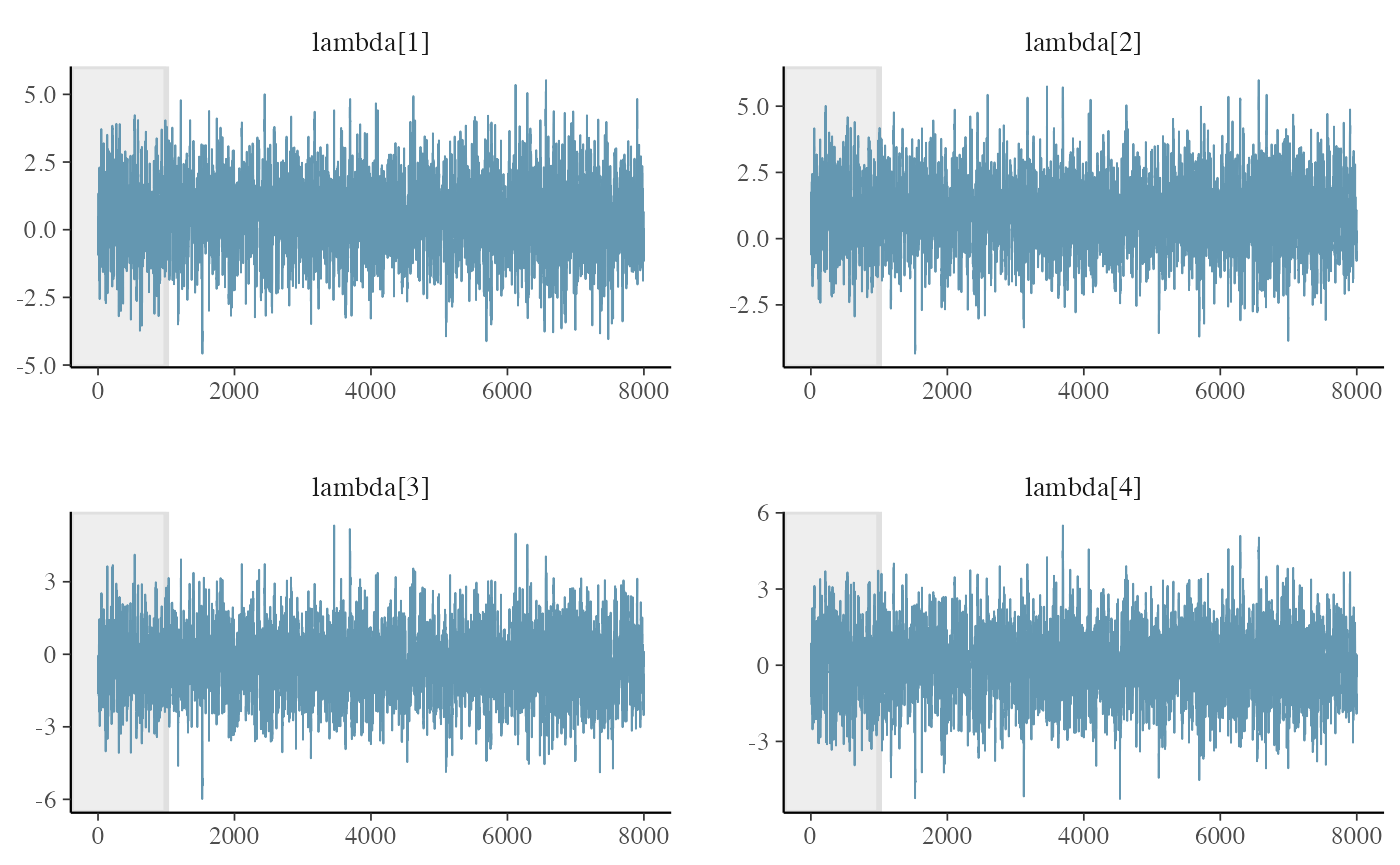

bayesplot::mcmc_trace(posterior_draws,pars = c("lambda[1]","lambda[2]","lambda[3]","lambda[4]"), n_warmup=1000)

Verifying the Rhat and neff

get_parameters_df(m1, n_eff = T, Rhat = T)

#> Parameter Mean Median HPD_lower HPD_higher Rhat

#> 1 lambda[Seles] 0.463208079 0.45433550 -2.24406 3.19640 1.0014

#> 2 lambda[Graf] 0.893729645 0.87886600 -1.79121 3.56240 1.0021

#> 3 lambda[Sabatini] -0.381270200 -0.37169750 -3.08218 2.36505 1.0011

#> 4 lambda[Navratilova] -0.004260618 0.00498822 -2.69949 2.75597 1.0013

#> 5 lambda[Sanchez] -1.166202402 -1.16131500 -3.90330 1.56671 1.0015

#> n_eff

#> 1 1579

#> 2 1535

#> 3 1587

#> 4 1544

#> 5 1589Predictive posterior

We first get the observed values and then the predictive values of the original dataframe. We can get predictive values with the predictive function and passing a data frame with the values we want to predict (in this case the original one). Note that we need to have the same column names in this new data frame

y_pp <- posterior_predictive(m1)

y<-as.vector(y_pp$y)

y_pred <- y_pp$y_pred

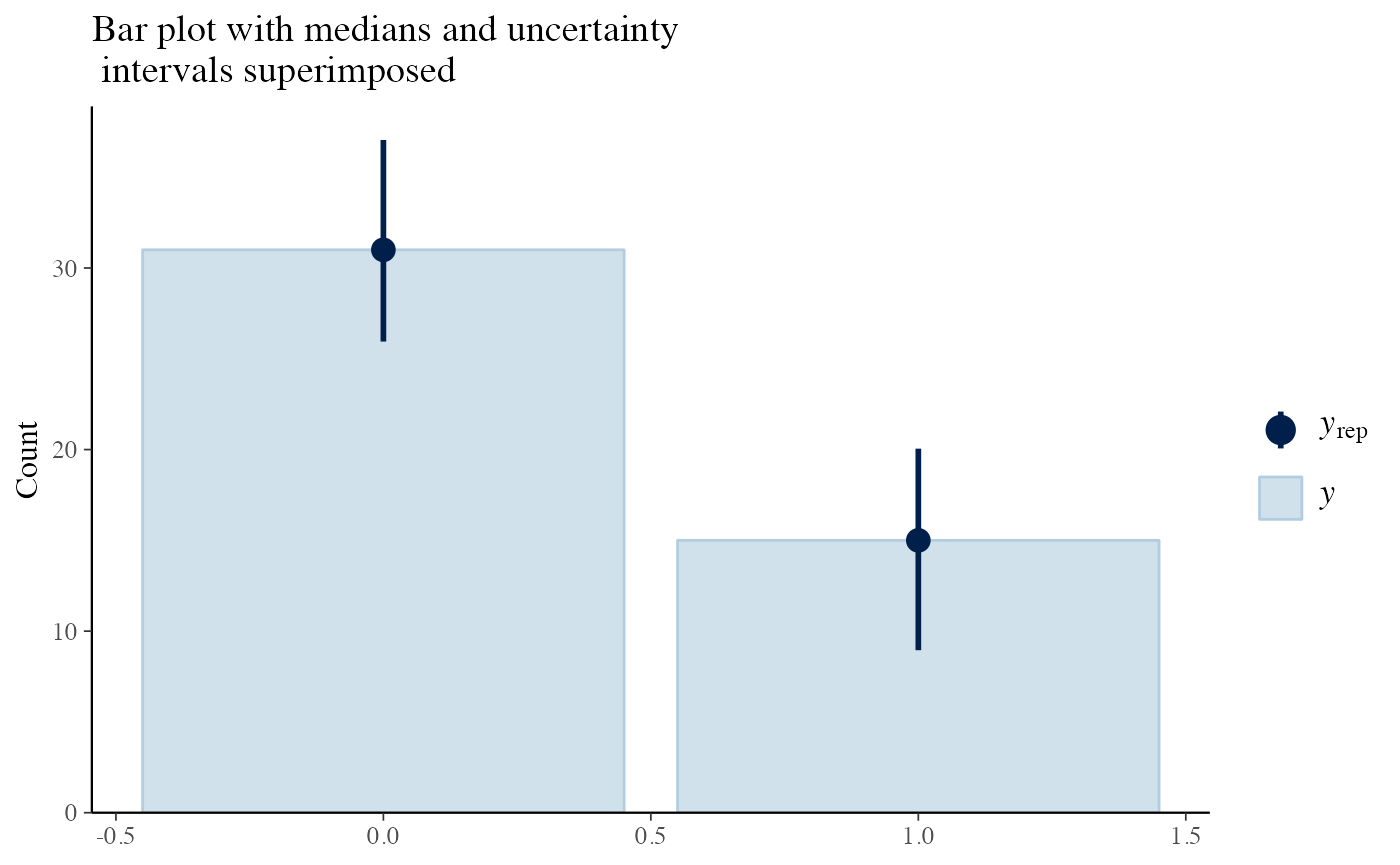

bayesplot::ppc_bars(y=y, yrep=y_pred) +

labs(title = 'Bar plot with medians and uncertainty\n intervals superimposed')

The plots indicate a good model as the predictive posterior and the observed values agree largely.

Parameter tables and plots

Now that we are confident that our model is correct, we can create some tables to report our results.

To see the results in the console the summary function provides a good overview of the model. With parameters, probability of winning and a ranking.

summary(m1)

#> Estimated baseline parameters with 95% HPD intervals:

#>

#> Table: Parameters estimates

#>

#> Parameter Mean Median HPD_lower HPD_higher

#> -------------------- ------ ------- ---------- -----------

#> lambda[Seles] 0.46 0.45 -2.24 3.20

#> lambda[Graf] 0.89 0.88 -1.79 3.56

#> lambda[Sabatini] -0.38 -0.37 -3.08 2.37

#> lambda[Navratilova] 0.00 0.00 -2.70 2.76

#> lambda[Sanchez] -1.17 -1.16 -3.90 1.57

#> NOTES:

#> * A higher lambda indicates a higher team ability

#>

#> Posterior probabilities:

#> These probabilities are calculated from the predictive posterior distribution

#> for all player combinations

#>

#>

#> Table: Estimated posterior probabilites

#>

#> i j i_beats_j j_beats_i

#> ------------ ------------ ---------- ----------

#> Graf Navratilova 0.65 0.35

#> Graf Sabatini 0.76 0.24

#> Graf Sanchez 0.88 0.12

#> Graf Seles 0.59 0.41

#> Navratilova Sabatini 0.55 0.45

#> Navratilova Sanchez 0.67 0.33

#> Navratilova Seles 0.36 0.64

#> Sabatini Sanchez 0.69 0.31

#> Sabatini Seles 0.37 0.63

#> Sanchez Seles 0.25 0.75

#>

#> Rank of the players' abilities:

#> The rank is based on the posterior rank distribution of the lambda parameter

#>

#> Table: Estimated posterior ranks

#>

#> Parameter MedianRank MeanRank StdRank

#> ------------ ----------- --------- --------

#> Graf 1 1.42 0.66

#> Seles 2 2.10 0.91

#> Navratilova 3 2.97 0.89

#> Sabatini 4 3.69 0.82

#> Sanchez 5 4.81 0.45If we want to create nicer tables and export them to latex/html we can leverage this with the kable function and the kableExtra package. Note that for extensive customization (and examples) we refer to the packages documentation.

Parameter table with HPD intervals

get_parameters_table(m1, format='html') %>%

kable_styling()| Parameter | Mean | Median | HPD_lower | HPD_higher |

|---|---|---|---|---|

| lambda[Seles] | 0.463 | 0.454 | -2.244 | 3.196 |

| lambda[Graf] | 0.894 | 0.879 | -1.791 | 3.562 |

| lambda[Sabatini] | -0.381 | -0.372 | -3.082 | 2.365 |

| lambda[Navratilova] | -0.004 | 0.005 | -2.699 | 2.756 |

| lambda[Sanchez] | -1.166 | -1.161 | -3.903 | 1.567 |

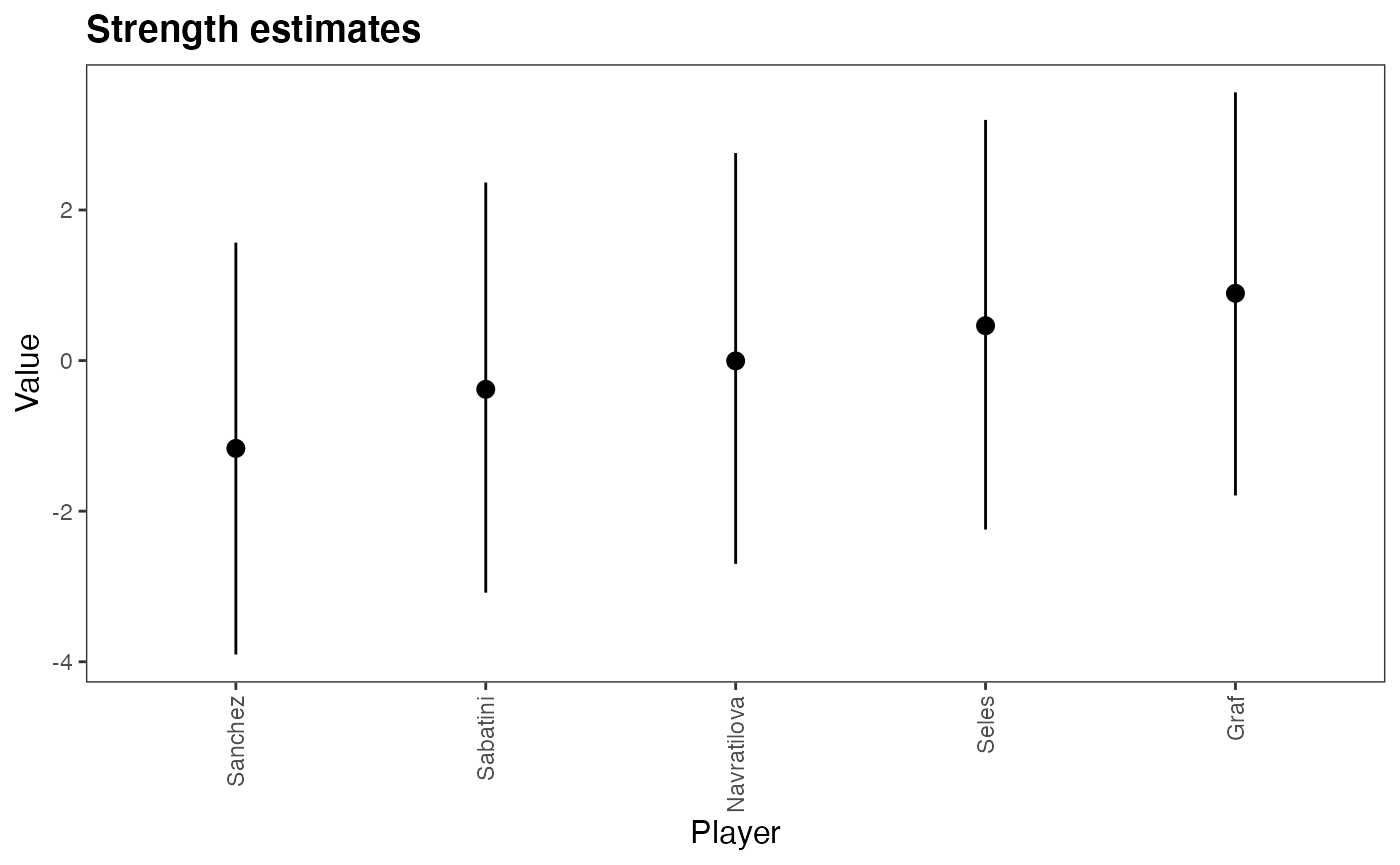

Plot the HPD intervals of the strength

plot(m1, rotate_x_labels = T)

get_probabilities_table(m1, format='html') %>%

kableExtra::kable_styling()| i | j | i_beats_j | j_beats_i |

|---|---|---|---|

| Graf | Navratilova | 0.76 | 0.24 |

| Graf | Sabatini | 0.77 | 0.23 |

| Graf | Sanchez | 0.94 | 0.06 |

| Graf | Seles | 0.53 | 0.47 |

| Navratilova | Sabatini | 0.58 | 0.42 |

| Navratilova | Sanchez | 0.65 | 0.35 |

| Navratilova | Seles | 0.40 | 0.60 |

| Sabatini | Sanchez | 0.63 | 0.37 |

| Sabatini | Seles | 0.29 | 0.71 |

| Sanchez | Seles | 0.16 | 0.84 |

We might also be interested in ranking the players based on their ability \(lambda\). In the Bayesian case, we sample the posterior distribution of \(lambda\) and rank them so we have posterior distribution of the ranks.

We can produce a table with the values of this dataframe.

get_rank_of_players_table(m1, format='html') %>%

kable_styling()| Parameter | MedianRank | MeanRank | StdRank |

|---|---|---|---|

| Graf | 1 | 1.376 | 0.625 |

| Seles | 2 | 2.141 | 0.894 |

| Navratilova | 3 | 2.978 | 0.909 |

| Sabatini | 4 | 3.699 | 0.796 |

| Sanchez | 5 | 4.806 | 0.476 |

Predicting results

To predict new results we need a data frame similar to the one used to fit the data. We use the same function as in the predicted posterior but now we provide the data we want to predict instead of the original data. Lets predict who is the winner for all games from Seles. Now we don’t want to return the matrix but a data frame

tennis_new_games<- tibble::tribble(~player0, ~player1,

'Seles', 'Graf',

'Seles', 'Sabatini',

'Seles', 'Navratilova',

'Seles', 'Sanchez')

y_seles<-predict(m1,tennis_new_games,n=100,return_matrix = T)

print(head(y_seles))

#> y_pred[1] y_pred[2] y_pred[3] y_pred[4]

#> [1,] 1 1 1 0

#> [2,] 0 0 0 0

#> [3,] 0 0 0 0

#> [4,] 0 0 0 0

#> [5,] 1 0 1 0

#> [6,] 0 0 0 0We can process this predictive posterior as desired. The summary function already gives the predictive probabilities to facilitate.

References

Agresti, Alan. 2003. Categorical Data Analysis. Vol. 482. John Wiley & Sons.

Gabry, Jonah. 2018. Shinystan: Interactive Visual and Numerical Diagnostics and Posterior Analysis for Bayesian Models. https://CRAN.R-project.org/package=shinystan.

Gabry, Jonah, Daniel Simpson, Aki Vehtari, Michael Betancourt, and Andrew Gelman. 2019. “Visualization in Bayesian Workflow.” J. R. Stat. Soc. A 182 (2): 389–402.

Stan Development Team. 2020. “RStan: The R Interface to Stan.” https://mc-stan.org/.

Turner, Heather, David Firth, and others. 2012. “Bradley-Terry Models in R: The Bradleyterry2 Package.” Journal of Statistical Software 48 (9).

Wickham, Hadley, Mara Averick, Jennifer Bryan, Winston Chang, Lucy D’Agostino McGowan, Romain François, Garrett Grolemund, et al. 2019. “Welcome to the tidyverse.” Journal of Open Source Software 4 (43): 1686.

Xie, Yihui. 2014. “Knitr: A Comprehensive Tool for Reproducible Research in R.” In Implementing Reproducible Computational Research, edited by Victoria Stodden, Friedrich Leisch, and Roger D. Peng. Chapman; Hall/CRC.

Zhu, Hao. 2020. KableExtra: Construct Complex Table with ’Kable’ and Pipe Syntax. https://CRAN.R-project.org/package=kableExtra.